Table of contents

- Measuring Media Accuracy in the Era of Big Data: Introduction

- The Challenge of Measuring Media’s Veracity

- Measuring Media Accuracy by Linking Event Data to News

- Modeling Media Accuracy

- Frames as Information

- Questions and Caveats (Or Measuring Legacy Journalism Consistency with the Facts, Effective Curation, and Perspective Diversity)

Measuring Media Accuracy in the Era of Big Data: Introduction

By Stuart Soroka

Concern about the accuracy of media content is, it seems, at an all-time high. There are worries about the identification and correction of mis- and disinformation, spurred on in part by instances of false information during the COVID-19 pandemic and disinformation related to the 2020 U.S. presidential election. On a broader level, polling continues to document the public’s declining trust in mainstream news. This declining trust is particularly worrisome in the context of a rise in politically polarized news sources. All of these issues have made it harder for media consumers to know which media outlets to trust, and on what issues. In this sense, media accuracy is less about whether a fact at hand is right or wrong, and more about ensuring that media consumers obtain the basic facts required for informed democratic citizenship.

At the same time, researchers’ ability to capture and analyze media content is stronger than ever before. The vast bulk of news content – from both mainstream and alternative sources – is readily available, digitally, almost instantly. And the tools required to identify and analyze this content improve on a near-monthly basis. We are currently better able than ever before to think about measuring the accuracy of media content – just at a time when concern about media accuracy is especially high.

It is in this context that a group of researchers got together for a one-day conference at UCLA to share ideas about how best to think about measuring media accuracy. We had the benefit of being able to draw not just on recent work on misinformation, but also on a rich, longstanding literature concerned with media accuracy more broadly. There have, after all, been longstanding concerns about the accuracy of foreign affairs coverage, and about biases in the coverage or framing of the environment and social welfare policy. Our aim was to build on this past work, to take advantage of recent advances in large-scale content analytics, and to consider some conceptual and practical advances in the empirical assessment of media accuracy.

Measuring media accuracy presents considerable challenges. As Leticia Bode’s contribution to this special issue highlights, assessing “consistency with the facts” requires evidence and expertise that are not always available. That said, Robert Bond’s work suggests that it is possible to obtain accuracy estimates through researchers’ own or fact-checking sites’ evaluations of evidence. Kasper Welbers’ paper illustrates how we can link newspaper coverage to established events databases to “analyze how the reality presented in the news systematically distorts reality.” Christopher Wlezien and I match news coverage with macroeconomic data to assess the accuracy of unemployment reporting by US networks. And Amber Boydstun and Jill Laufer illustrate the importance of considering not just simple facts but also “issue frames” in our consideration of media accuracy.

Each of these approaches leverages large bodies of data stemming from content analysis of news coverage. Several of these approaches also find domains in which “objective” measures of reality are available. There clearly are difficulties in assessing accuracy across all news topics, but there are also areas in which (admittedly partial) indicators of media accuracy are increasingly feasible.

Measuring media accuracy—across topics, time, and media outlets—can provide news producers with a valuable assessment of their own work; it can provide scholars of political communication and journalism with the indicators necessary for analyses of the causes and consequences of media (in)accuracies; and it can provide news consumers with valuable information to make decisions about where to get (or not get) their news. For all of these reasons, we hope that the papers included will stimulate a conversation about how to conceptualize and measure media accuracy.

The Challenge of Measuring Media’s Veracity

By Robert Bond

The media has paid substantial attention to misinformation’s role in high-profile events like elections and the pandemic. However, how often do readers encounter media stories that they know in the moment are (or that are later proven to be) completely false? A rapidly growing academic literature on misinformation has largely focused on people’s misperceptions of facts, i.e., when they believe in things that are false or reject belief in things that are true. This focus makes a lot of sense: some fundamental components of our collective ability to participate effectively in a democratic society depend on people having a correct and shared understanding of the state of the world. Academics have endeavored to understand where misperceptions come from. There are many possible explanations, but one that has attracted both academic and public attention is the media, or what researchers would call media effects.

The basic story of media effects on misperceptions is straightforward and intuitive. Many people get a substantial amount of information about important matters, like politics, the economy, and the health care system, from the media they consume. If many people base their beliefs on the information provided by the media, then the veracity of the information presented in the media will have a substantial impact on the accuracy of people’s beliefs. In short, if media effects are large, when people consume veracious media content their beliefs will tend to be accurate and when people consume untrue media content their beliefs will tend to be inaccurate.

Researchers have developed several ways to understand whether people’s beliefs are accurate or not. Perhaps the most common approach is to survey people and ask whether they believe true or false statements whose veracity was established before the statements were put on the survey. When people say that they believe false statements are true or that true statements are false, researchers consider them to hold a misperception on that topic. However, understanding whether the media is to blame for misperceptions, or deserves praise for accurate beliefs, entails other challenges.

Fundamental to understanding the media’s role in promoting or hindering misperceptions is knowing when the media itself has presented true or false information. This is much harder to establish than it may seem at first blush. Although some stories presented in the media have been made up out of whole cloth, such stories are rare and do not often get substantial attention from the public. More often, stories are based to some degree on reality, but important facts have been changed or omitted in ways that make them unreliable. That is, a typical reader would be likely to come away from such a story with a misunderstanding of what happened.

To make this conceptualization of media veracity more concrete, my collaborators and I have often conceptualized accurate media as that which is consistent with the best available facts at the time. Built into this conceptualization are three key components that make identifying accurate media challenging. The first is that media should be consistent or compatible with the facts. That is, the information presented should be factual and representative of the full range of perspectives. For example, a story saying that “scientists believe there is life on Pluto” may be technically true if its producer can find two scientists who have said so. But if the story does not put those two scientists in context by noting the many other scientists who disagree, it is likely to lead to a misperception. As such, it may be useful to think about information presented in the media not only as “true” or “false”, but also as potentially misleading. Misleading stories could then be placed on a scale from slightly misleading to very misleading.

Remember, as I have framed this post, a common goal for researchers is to understand why people hold accurate or inaccurate beliefs. To achieve this goal, and particularly to understand what role the media play in affecting people’s beliefs, understanding when a story is misleading is likely just as helpful, if not more so, than establishing veracity with great precision.

Actually measuring media’s veracity, particularly at a large scale across the wide-ranging and diverse media ecosystem, is a substantial challenge. Current approaches involve tradeoffs in what they can tell us about the media environment. One approach is for researchers themselves to read stories and to attempt to verify the information (for example, in this paper). This approach may enable researchers to study individual stories of interest, but it depends on the information presented being verifiable, and it is very challenging to implement at scale. A second approach outsources the verification process to fact-checking organizations, such as Snopes or PolitiFact (for example, in this paper and this paper). These organizations dedicate substantial time and resources to verification, but researchers are dependent on the stories these organizations choose to check, which may limit the range of topics that can be studied. Finally, researchers sometimes use the reputation of an outlet as a proxy for its reliability. Several organizations (e.g., Media Bias/Fact Check, NewsGuard) regularly rate media organizations for their tendency to produce accurate content (for example, in this paper). Although this greatly increases the scale at which accuracy can be measured, these ratings say little about whether individual stories are presenting factual information or not.

New, innovative research approaches are likely key to expanding our understanding of why, when, and how the media present accurate or misleading information to people, and ultimately to what effect on people’s beliefs. Although developing such approaches is likely to be challenging, the understanding we gain is likely to be well worth it.

Measuring Media Accuracy by Linking Event Data to News

By Kasper Welbers

As gatekeepers of information, news media are tasked with filtering and molding a vast and complex daily reality into a comprehensible set of news stories. Citizens need these stories to comprehend the world around them, and thus need access to gatekeepers that they can trust to perform this task accurately. Yet, perhaps more than ever, people are divided on who these accurate gatekeepers are. News outlets that some consider the flagships of reporting are the epitome of fake news to others. Although this rift might in large part be political, it should also entice us to think more deeply about how we can evaluate media accuracy. Is it possible to define accuracy in such a way that different parties can agree on it, so that it can ground an empirical debate? And if so, can it be measured at scale, to study media accuracy in today’s vast media landscape?

These are big questions, and in this essay my modest contribution to addressing them is to discuss a potential research design for measuring media accuracy. I will build on a paper in which colleagues and I applied this design to study media coverage of terrorist attacks. As a methodological paper, it focused mostly on implementation details, but I believe that its greater contribution actually lies in the more general research design. The goal of this essay is to clarify this design with fewer technical distractions, and to elaborate on how it might help us in measuring media accuracy.

The research design builds on the idea that gatekeepers can be conceptualized as functions that receive some real-world input (a vast and complex daily reality) and transform it into output (news stories). If we are able to quantify this input and output, then we can identify the function. In our paper, we proposed to quantify both as sets of events. For example, if we define the input as the set of all terrorist attacks, and we analyze the output to see which events are covered, then we can identify the probability that events from different countries are covered. More generally, any information that we have about the events in our input set can be used to analyze how (accurately) the output reflects the input.
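The input/output framing can be made concrete with a small sketch. Assuming the linking step has already produced the set of event ids that appear in at least one news item, the coverage probability for any event attribute is simply the share of input events in each group that made it into the output. The function name, the dict-based event records, and the toy data are hypothetical illustrations, not the paper's implementation.

```python
from collections import defaultdict


def coverage_rate_by(events, covered_ids, key):
    """Estimate P(covered | attribute) from linked input and output sets.

    events      -- list of dicts for the input set (e.g. attacks); each needs
                   an "id" and the attribute named by `key`.
    covered_ids -- set of event ids linked to at least one news item.
    key         -- event attribute to group by, e.g. "country".
    """
    totals = defaultdict(int)
    covered = defaultdict(int)
    for event in events:
        group = event[key]
        totals[group] += 1
        if event["id"] in covered_ids:
            covered[group] += 1
    # Share of real-world events in each group that made it into the news.
    return {group: covered[group] / totals[group] for group in totals}


# Hypothetical toy input set: two events in Country A, four in Country B.
events = [
    {"id": 1, "country": "Country A"},
    {"id": 2, "country": "Country A"},
    {"id": 3, "country": "Country B"},
    {"id": 4, "country": "Country B"},
    {"id": 5, "country": "Country B"},
    {"id": 6, "country": "Country B"},
]
covered_ids = {1, 2, 3}  # ids the linking step matched to news items

print(coverage_rate_by(events, covered_ids, "country"))
# {'Country A': 1.0, 'Country B': 0.25}
```

Any other event attribute (victim counts binned into categories, attack type) can be passed as `key` in the same way.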

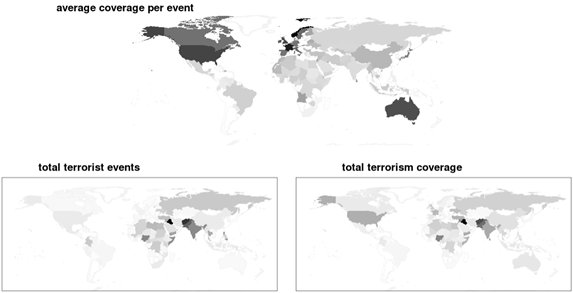

Figure 1. News coverage of terrorist attacks across countries

To conduct this type of analysis we need to overcome two challenges. First, we need to obtain reliable and valid real-world measures of a set of events. This limits the application of the approach, because such data are not always available. However, event data are becoming increasingly common. Digital infrastructures have facilitated the creation of event databases, and these are used in various fields to study patterns in events like terrorist attacks (GTD), disasters (EM-DAT) and political violence (ACLED). At the same time, many elements of modern life are automatically documented through online digital traces. Events can also be social media posts, speech acts in parliament, or even other news publications and news wires. The key criterion for using them to study media accuracy is that we can trace their coverage in the output of gatekeepers.

The second challenge, then, is how to perform this tracing of event coverage. In other words, how to link the items in the input set to the items in the output set. If the input set is very large, it can become quite hard if not impossible to do this manually. One of the primary contributions of our methodological paper was that it presented a computational text analysis solution and open-source tool to use it. The details and limitations of this method are out of the scope of this essay, but also not that important for the overarching argument, which is that modern computational text analysis techniques can help us overcome the challenge of linking the input and output sets.
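The essay leaves the linking method out of scope, so the sketch below swaps in a generic technique to show what "linking input to output" can mean mechanically: TF-IDF cosine similarity between event descriptions and news texts, restricted to articles published within a window after the event. It is not the authors' tool; the seven-day window, the 0.2 similarity threshold, the `(id, date, text)` record format, and the toy records are all assumptions.

```python
import math
import re
from collections import Counter
from datetime import date


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs):
    """One {term: weight} dict per document, using smoothed IDF."""
    tokenized = [Counter(tokenize(d)) for d in docs]
    n = len(docs)
    df = Counter(term for counts in tokenized for term in counts)
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in df}
    return [{t: c * idf[t] for t, c in counts.items()} for counts in tokenized]


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def link_events(events, articles, window_days=7, threshold=0.2):
    """Link each event to articles published 0..window_days after it whose
    text is similar enough to the event description.

    events, articles -- lists of (id, date, text) tuples.
    Returns {event_id: [linked article ids]}.
    """
    texts = [t for _, _, t in events] + [t for _, _, t in articles]
    vectors = tfidf_vectors(texts)
    event_vecs, article_vecs = vectors[:len(events)], vectors[len(events):]
    links = {}
    for (eid, edate, _), evec in zip(events, event_vecs):
        links[eid] = [
            aid
            for (aid, adate, _), avec in zip(articles, article_vecs)
            if 0 <= (adate - edate).days <= window_days
            and cosine(evec, avec) >= threshold
        ]
    return links


# Hypothetical toy records.
event_records = [
    ("e1", date(2015, 3, 1), "bombing at market in northern city kills twelve"),
    ("e2", date(2015, 3, 2), "armed assault on police checkpoint near border"),
]
articles = [
    ("a1", date(2015, 3, 2), "Twelve killed as bombing hits crowded market in northern city"),
    ("a2", date(2015, 3, 20), "Police checkpoint assault near border"),
    ("a3", date(2015, 3, 3), "Parliament debates budget"),
]
print(link_events(event_records, articles))
```

Here `a2` matches `e2` textually but falls outside the seven-day window, so `e2` stays unlinked; widening the window links it. Choices like these directly shape the estimated gatekeeping function and would need validating against manual coding.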

Once we have linked the input and output, we can start analyzing the gatekeeping function. For illustration, we traced the coverage of events from the Global Terrorism Database in news coverage from The Guardian between 2006 and 2018 across 131 countries. We can now, for example, compare the frequency of actual terrorist events per country to the coverage of attacks in these countries. Figure 1 shows that the total amount of terrorism coverage is a decently accurate reflection of the actual frequency of terrorist attacks. However, when we look at the average amount of coverage per terrorist attack, we see that North America, Europe and Australia are clearly overrepresented in The Guardian.
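The per-attack comparison in Figure 1 amounts to dividing coverage volume by event volume within each region and comparing that rate with the overall rate. A minimal sketch, assuming per-region counts of events and of linked news items are already available; the function name, region labels, and counts are hypothetical, not the Guardian/GTD figures.

```python
def coverage_per_event(event_counts, article_counts):
    """Compare the volume of coverage with the volume of real-world events.

    event_counts   -- {region: number of events in the input set}
    article_counts -- {region: number of linked news items}
    Returns {region: (articles per event, ratio to the overall average)}.
    A ratio above 1 means the region gets more coverage per event than average.
    """
    overall = sum(article_counts.values()) / sum(event_counts.values())
    result = {}
    for region, n_events in event_counts.items():
        per_event = article_counts.get(region, 0) / n_events
        result[region] = (per_event, per_event / overall)
    return result


# Hypothetical counts: Region A has few attacks but heavy coverage.
events_by_region = {"Region A": 10, "Region B": 190}
articles_by_region = {"Region A": 50, "Region B": 150}
for region, (per_event, ratio) in coverage_per_event(
        events_by_region, articles_by_region).items():
    print(f"{region}: {per_event:.2f} articles per event, ratio {ratio:.2f}")
```

In this toy example Region B accounts for most of the coverage in total, yet Region A is overrepresented per event, which is the same distinction the essay draws between total coverage and coverage per attack.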

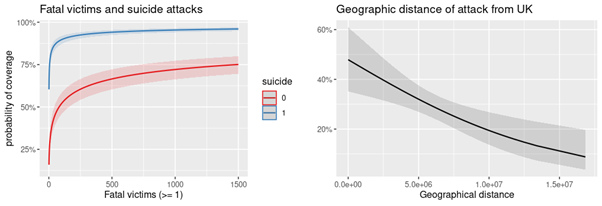

Figure 2. Marginal effects of event characteristics

For a more rigorous analysis, we can fit a multilevel logistic regression model that predicts whether an event was covered (i.e., received at least one news item) based on any available event characteristics. For demonstration purposes, we look at several likely predictors based on the news values literature and prior terrorism research, namely the number of victims, geographical distance, and whether it was a suicide attack. Figure 2 visualizes the marginal effects, which reveal the expected pattern: attacks that are closer to the UK, have many victims, and involve a suicide attacker are more likely to be covered.
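The modeling step can be sketched in plain Python, with loud simplifications: this fits a single-level logistic regression by Newton-Raphson (the essay's model is multilevel; the grouping level is dropped here), uses synthetic events rather than the GTD data, and codes the predictors as log victims, distance in thousands of kilometers, and a 0/1 suicide-attack indicator. All of these are hypothetical choices. Average marginal effects are the mean derivative p(1 - p)β for continuous predictors and the mean 0-to-1 change in predicted probability for the binary one.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_logit(X, y, iterations=25):
    """Single-level logistic regression by Newton-Raphson.
    Returns [intercept, b1, ..., bk]."""
    rows = [[1.0] + list(xi) for xi in X]
    k = len(rows[0])
    beta = [0.0] * k
    for _ in range(iterations):
        grad = [0.0] * k
        hess = [[0.0] * k for _ in range(k)]
        for xi, yi in zip(rows, y):
            p = sigmoid(sum(b * x for b, x in zip(beta, xi)))
            w = p * (1.0 - p)
            for a in range(k):
                grad[a] += (yi - p) * xi[a]
                for c in range(k):
                    hess[a][c] += w * xi[a] * xi[c]
        step = solve(hess, grad)  # Newton step: H^-1 * gradient
        beta = [b + s for b, s in zip(beta, step)]
    return beta


def predict(beta, xi):
    return sigmoid(beta[0] + sum(b * x for b, x in zip(beta[1:], xi)))


def average_marginal_effect(beta, X, j, binary=False):
    """Average change in P(covered) attributable to predictor j."""
    total = 0.0
    for xi in X:
        if binary:
            # Discrete change: predictor switched from 0 to 1.
            on, off = list(xi), list(xi)
            on[j], off[j] = 1.0, 0.0
            total += predict(beta, on) - predict(beta, off)
        else:
            # Slope of the logistic curve at this observation.
            p = predict(beta, xi)
            total += p * (1.0 - p) * beta[j + 1]
    return total / len(X)


# Hypothetical synthetic events with known true coefficients.
random.seed(1)
X, y = [], []
for _ in range(1000):
    log_victims = math.log1p(random.randint(0, 50))
    distance = random.uniform(0.0, 10.0)  # thousands of km from the UK
    suicide = 1.0 if random.random() < 0.2 else 0.0
    z = -1.0 + 0.8 * log_victims - 0.4 * distance + 1.0 * suicide
    X.append([log_victims, distance, suicide])
    y.append(1 if random.random() < sigmoid(z) else 0)

beta = fit_logit(X, y)
for j, name in enumerate(["log victims", "distance", "suicide attack"]):
    ame = average_marginal_effect(beta, X, j, binary=(name == "suicide attack"))
    print(f"{name}: {ame:+.3f}")
```

With these synthetic coefficients the printed effects come out positive for victims and suicide attacks and negative for distance, matching the direction of the pattern the essay reports; the magnitudes mean nothing beyond this toy data. A real multilevel fit would add group-level intercepts, for example by country.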

There is much more to say about this particular data, but the key take-away is that by linking event data to news items, we can analyze how the reality presented in the news systematically distorts reality. In some cases, such distortions can be harmful and reveal biases in reporting, and this method can be used to analyze them at a scale that enables comparative and longitudinal analysis. Although not shown here, we could also conduct an additional content analysis of the news items to analyze how they reflect the event in terms of factual accuracy and narrative.

I thus conclude with the suggestion that being able to analyze the output of gatekeepers in light of real-world input seems a promising direction for analyzing media accuracy, and the observation that this type of analysis is increasingly possible. As reality is becoming more and more documented in digital repositories, and innovations in text analysis enable us to better mine this information, we have the means to quantify sets of news events on such a scale that we can use them to approximate the real-world input of gatekeepers.

Modeling Media Accuracy

By Stuart Soroka and Christopher Wlezien

In some ways, the modern media environment is novel: there are many more sources of news content than ever before, and their potential audience has increased dramatically with the rise of the internet and especially social media. These changes have also shifted who “gatekeeps” the news, and how much actual gatekeeping there is.

In this context, current worries about mis- and disinformation reflect concerns about accuracy, or the lack of it. Such concerns really are not new, however. There are longstanding literatures focused on inaccuracies in US media coverage of health care, welfare, and foreign affairs, for instance. Recent research indicates that there are issue domains in which media coverage has represented government policymaking relatively accurately, and other domains in which media have been systematically inaccurate—not just for a single bill, but for an entire topic, for years. There are media outlets that are more or less accurate, and there are times when they are more or less accurate. But what exactly is media accuracy? And how can we capture it empirically?

Accuracy in media coverage is, in our view, about the degree to which that coverage reflects reality. It is not always easy to say what that reality is, however. Consider national security: how can we tell whether we have it, and how much of it we have? In other instances, reality is more readily evident. One example is the unemployment rate, which we know from well-established (and readily accessible) objective measures. In this case, given a clear indication of reality, we can use relatively simple content-analytic techniques to assess the accuracy of media coverage.

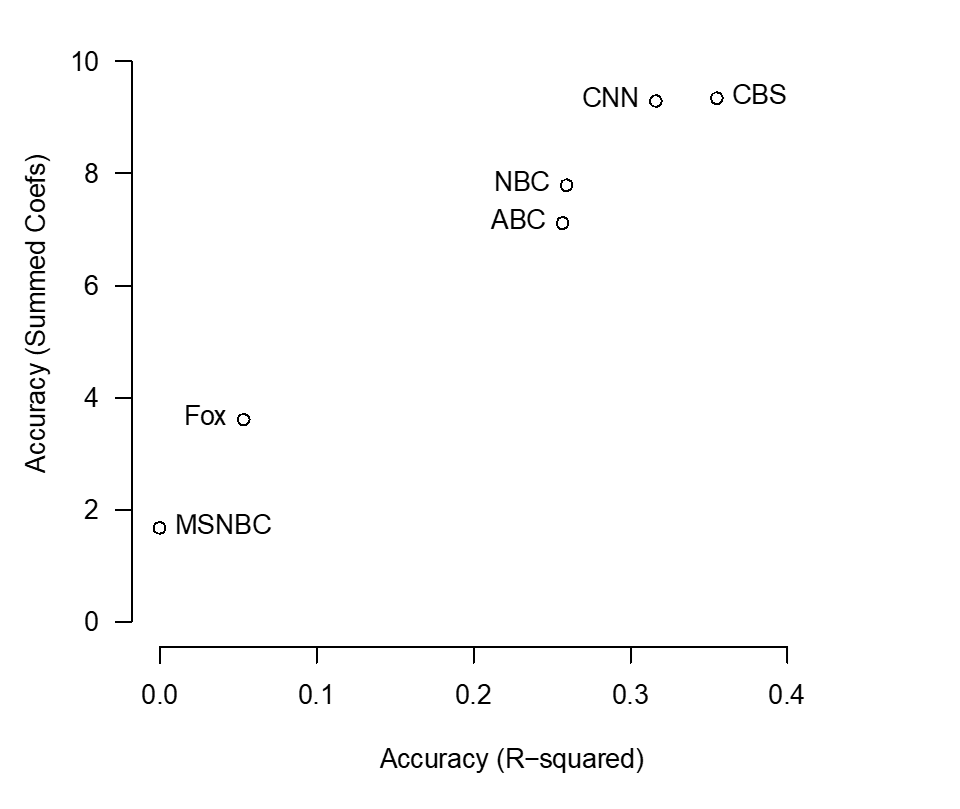

By way of example, the figure shown here illustrates two measures of accuracy in coverage of unemployment for the six major television networks in the US from 1999 to 2020. Results indicate that, by both measures, accuracy is much higher for CNN and the “big 3” broadcast networks, especially CBS, than for Fox and MSNBC. Put differently: a viewer whose information comes primarily from the latter two networks will have a less accurate view of unemployment than will a viewer whose information comes primarily from the others. This may have important consequences insofar as perceptions matter for both economic and electoral behavior.

How do we capture the measures of accuracy shown in this figure? Both begin by applying a relatively straightforward layered dictionary approach to the population of transcripts for all news programs on each of the six networks. This includes the major morning and evening news programs on ABC, CBS, and NBC, and most regular programming on CNN, Fox, and MSNBC. We search for every sentence in these transcripts that mentions employment, jobs, unemployment, or jobless, and then search within those sentences for any words indicating upward or downward change. By “layering” these unemployment and direction dictionaries, we are able to capture any sentence that suggests upward or downward movement in unemployment. (Note that we reverse-code sentences about employment and jobs, so that downward signals about employment are matched with upward signals about unemployment.) We then subtract the number of downward sentences from the number of upward sentences each month. This produces a “media signal,” where values above zero indicate coverage suggesting upward movement in unemployment, and values below zero indicate coverage suggesting downward movement in unemployment.
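The layering logic can be sketched in a few lines. This is a minimal sketch under assumptions: the essay names the topic terms but does not publish its direction dictionaries, so every word list below, the naive regex sentence splitter, the rule that unemployment terms take precedence when a sentence mentions both topics, and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical word lists; illustrative only, not the authors' dictionaries.
UNEMPLOYMENT_TERMS = {"unemployment", "jobless"}
EMPLOYMENT_TERMS = {"employment", "job", "jobs"}  # reverse-coded
UP_TERMS = {"up", "rise", "rises", "rose", "rising", "increase",
            "increased", "higher", "jump", "jumped"}
DOWN_TERMS = {"down", "fall", "falls", "fell", "falling", "decrease",
              "decreased", "lower", "drop", "dropped"}


def split_sentences(text):
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def sentence_direction(sentence):
    """+1 if the sentence signals rising unemployment, -1 if falling, else 0."""
    tokens = set(re.findall(r"[a-z]+", sentence.lower()))
    # Layer 1: topic dictionary (unemployment terms take precedence; assumed).
    if tokens & UNEMPLOYMENT_TERMS:
        sign = 1
    elif tokens & EMPLOYMENT_TERMS:
        sign = -1  # falling employment signals rising unemployment
    else:
        return 0
    # Layer 2: direction dictionary, applied only within topic sentences.
    up, down = bool(tokens & UP_TERMS), bool(tokens & DOWN_TERMS)
    if up == down:  # no direction word, or conflicting ones
        return 0
    return sign if up else -sign


def monthly_signal(transcripts):
    """transcripts: iterable of (month, text) pairs.
    Returns {month: upward sentences minus downward sentences}."""
    signal = defaultdict(int)
    for month, text in transcripts:
        for sentence in split_sentences(text):
            signal[month] += sentence_direction(sentence)
    return dict(signal)


transcripts = [  # hypothetical snippets
    ("2009-01", "Unemployment rose again last month. Stocks were flat."),
    ("2009-01", "Job numbers fell sharply."),
    ("2009-06", "Jobless claims dropped this week."),
    ("2009-06", "Hiring and jobs went up. Unemployment was unchanged."),
]
print(monthly_signal(transcripts))
# {'2009-01': 2, '2009-06': -2}
```

Using token sets rather than substring search keeps "employment" from matching inside "unemployment". Short lists like these will misfire (a sentence such as "layoffs increased" is missed entirely), which is one source of the measurement error the authors acknowledge.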

Now, it is not at all clear how to directly measure the accuracy of the signals, as we do not have a yardstick for exactly matching them to the unemployment rate (or other data). What we can do, however, is use regression analysis to model those monthly signals as a function of changes in the unemployment rate in the current and preceding months. Because the number of sentences varies across the networks, we standardize each of the signals so they all have the same mean and variance; this allows us to focus specifically on the accuracy of the signals, not the amount of coverage (often referred to as “attention”). (CNN is the main outlier where the volume of coverage is concerned, with four times as much relevant coverage as the other two cable networks, and ten times that of the broadcast outlets.) These regression models produce two estimates of accuracy: one based on the sum of the coefficients for changes in unemployment, and the other based on the R-squared. The former tells us how much effect changes in unemployment rates have on the media signal; the latter tells us how much of that signal is about unemployment rates (as opposed to other things).
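The two accuracy estimates can be sketched with ordinary least squares in plain Python. The essay says only "current and preceding months", so the single lag used here is an assumption, as are the sample-standard-deviation standardization, the helper names `solve`, `ols`, and `accuracy_measures`, and the hypothetical monthly series; this is not the authors' code.

```python
def standardize(values):
    """Rescale a series to mean 0 and sample standard deviation 1."""
    mean = sum(values) / len(values)
    sd = (sum((v - mean) ** 2 for v in values) / (len(values) - 1)) ** 0.5
    return [(v - mean) / sd for v in values]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ols(X, y):
    """Least squares with an intercept via the normal equations.
    Returns (coefficients, R-squared)."""
    rows = [[1.0] + list(r) for r in X]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * x for b, x in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return beta, 1.0 - ss_res / ss_tot


def accuracy_measures(signal, unemployment_change, lags=1):
    """Regress a standardized monthly media signal on current and lagged
    changes in unemployment. Returns (sum of slope coefficients, R-squared)."""
    z = standardize(signal)
    X = [[unemployment_change[t - l] for l in range(lags + 1)]
         for t in range(lags, len(z))]
    y = z[lags:]
    beta, r_squared = ols(X, y)
    return sum(beta[1:]), r_squared


# Hypothetical monthly changes in the unemployment rate (percentage points).
changes = [0.1, -0.2, 0.3, 0.0, -0.1, 0.2, 0.4, -0.3, 0.1, -0.2, 0.0, 0.3]
# A signal that tracks current and last month's changes exactly.
tracking = [5 * changes[0]] + [5 * changes[t] + 3 * changes[t - 1]
                               for t in range(1, len(changes))]
# A signal unrelated to unemployment.
unrelated = [2, -1, 3, 1, -2, 0, 1, 3, -3, 2, 0, -1]

for name, series in [("tracking", tracking), ("unrelated", unrelated)]:
    total, r2 = accuracy_measures(series, changes)
    print(f"{name}: sum of coefficients {total:.2f}, R-squared {r2:.3f}")
```

Because the tracking series is an exact linear function of current and lagged changes, its R-squared is essentially 1; for the unrelated series the R-squared reflects only chance association. Standardizing first means the sum of coefficients is comparable across outlets regardless of how much coverage each produces.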

As can be seen in the figure, the two estimates are highly correlated. From the sum of coefficients, we can see that unemployment matters more for coverage of some outlets than others. CNN and the three broadcast networks, particularly CBS, produce higher measures of accuracy than do Fox and MSNBC. While we cannot tell what the sum of coefficients should be, we can tell that more is better. And from the R-squareds, we can see that the related signals also are “purer” (closer to 1.0) for the former networks than for the latter ones. To be clear: for the most accurate networks, coverage is more reflective of unemployment rates. That said, the fact that all estimates are below 0.5 reveals that most coverage is not directly related to current or recent unemployment rates, even for the most accurate networks.

These results illustrate ways to assess media accuracy. That is, we can model the relationship between coverage and objective reality where measures of the latter are available. Even in these instances, however, we cannot say much about the right amount of coverage, and our analysis also reveals that most television coverage relating to unemployment does not reflect the recent unemployment rate. Some of the difference surely is measurement error, but there presumably is more to the story. Disinformation is a suspect for some of the variation across networks, but it may also be that coverage on all networks reflects various features of reality that provide a more complete picture of employment and jobs than simple quantitative measures of unemployment (rates) reveal. Intersubjective consensus might help here, and expert judgments too, and this may be especially the case in the many other areas where we cannot objectively measure reality. These strike us as important subjects for future investigation.

In the meantime, we suggest that measures capturing the strength of the connection between media content and reality are possible, at least in certain domains. Further measures – for the television networks alongside 17 national newspapers, across five major budgetary policy domains – are available at mediaaccuracy.net.

Frames as Information

By Amber Boydstun and Jill Laufer

Contemporary discussions of media inaccuracy often center on social media, where platforms have limited abilities (and limited incentives) to curb misinformation and disinformation. Yet it is just as important to consider media inaccuracy in the context of legacy news outlets. We interpret “media inaccuracy” broadly, to mean media portrayals that do not accurately represent the diverse array of considerations about an issue: considerations that people would likely want to weigh if they had more thorough information.

Readers and viewers generally expect accuracy from legacy media in the U.S.: outlets like the New York Times, Philadelphia Inquirer, and Wall Street Journal, or nightly national news programs on ABC, CBS, NBC, or PBS. Nearly twice as many Americans trust national news organizations as trust social media sites. We argue that this heightened trust makes getting an accurate sense of these organizations’ accuracy a matter of primary importance. Inaccuracies in the legacy media can be more dangerous to public trust, and to the normative marketplace of ideas the media is supposed to offer, than media inaccuracies in any other context.

Arguably part of the reason people tend to trust legacy news outlets is that Americans have a normative idea of them as watchdogs. The public knows news outlets don’t always get it right. Still, they view the legacy press as having the responsibility to surveil the world and then transmit information about what is happening to the public. The normative model does not require that this information be perfect or complete, but it does presuppose that the information is broadly representative of reality.

With this in mind, we propose a broader definition of media accuracy, to encompass not only whether the news is factually correct but whether the news provides a representative snapshot of a news item, be it an event, a policy issue, or a political campaign. Of course, to know whether the media’s portrayal of a news item is representative, we would need to be able to define the “reality” that we hope the media is portraying, and that task is impossible. Yet even though we cannot accurately compare media portrayals of an issue to the issue’s “reality,” it is still worth investigating what the marketplace of ideas for a given issue looks like, as portrayed through the legacy press.

| Table 1. Policy Frames Categories |
|---|
| Capacity & Resources |
| Crime & Punishment |
| Cultural Identity |
| Economic |
| External Regulation & Reputation |
| Fairness & Equality |
| Health & Safety |
| Legality, Constitutionality, & Jurisdiction |
| Morality |
| Policy Prescription & Evaluation |
| Political |
| Public Sentiment |
| Quality of Life |
| Security & Defense |

As part of our Policy Frames Project, we collected all U.S. newspaper articles from 13 national and regional newspapers on each of six different policy issues—climate change, the death penalty, immigration, gun control, same-sex marriage, and smoking/tobacco—from 1995 through 2014. We then used machine learning trained on manual annotations to categorize each news article according to the primary frame used in it (see Table 1). For example, a news article talking about crime rates among immigrants would be coded under the “crime & punishment” frame, an article talking about the health risks immigrants face when crossing the US/Mexico border would be coded under the “health & safety” frame, an article about public protests of immigration policy would be coded under the “public sentiment” frame, and so on. Thus, these frames serve to capture the perspectives of different sub-issues within a given issue.
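The essay does not specify which machine-learning model was trained on the manual annotations, so the sketch below swaps in a generic technique, multinomial naive Bayes with add-one smoothing, to show mechanically what "trained on manual annotations" involves: learn word frequencies per annotated frame, then assign a new article its most probable frame. The class name, the training sentences, and the restriction to three of the 14 frames are hypothetical; a real classifier would need many annotated articles per frame.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesFrameClassifier:
    """Multinomial naive Bayes with add-one smoothing over (text, frame) pairs."""

    def fit(self, texts, frames):
        self.frame_counts = Counter(frames)
        self.word_counts = defaultdict(Counter)
        for text, frame in zip(texts, frames):
            self.word_counts[frame].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {f: sum(c.values()) for f, c in self.word_counts.items()}
        self.n_docs = len(frames)
        return self

    def predict(self, text):
        """Return the primary frame with the highest log posterior."""
        tokens = tokenize(text)

        def score(frame):
            logp = math.log(self.frame_counts[frame] / self.n_docs)
            denom = self.total_words[frame] + len(self.vocab)
            for w in tokens:
                if w in self.vocab:  # ignore words never seen in training
                    logp += math.log((self.word_counts[frame][w] + 1) / denom)
            return logp

        return max(self.frame_counts, key=score)


# Hypothetical annotated examples for three frames.
training = [
    ("court ruling on the constitutionality of the law",
     "Legality, Constitutionality, & Jurisdiction"),
    ("judge rules statute violates constitution",
     "Legality, Constitutionality, & Jurisdiction"),
    ("crime rates and arrests among offenders", "Crime & Punishment"),
    ("police arrest suspects in crime wave", "Crime & Punishment"),
    ("protesters rally against the policy", "Public Sentiment"),
    ("poll shows public opinion shifting", "Public Sentiment"),
]
texts, frames = zip(*training)
clf = NaiveBayesFrameClassifier().fit(list(texts), list(frames))
print(clf.predict("supreme court ruling on the law"))
```

Naive Bayes is only a convenient baseline; a production system would also be validated against held-out manual annotations before its frame counts are trusted.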

We cannot precisely estimate what an ideal distribution of attention would be across these frames. If the underlying truth of a policy issue should drive how it is framed in the media, that truth likely makes some frames more relevant for some issues. One might argue, for example, that an accurately representative portrayal of smoking and tobacco should focus overwhelmingly on framing the issue from the perspective of health & safety (e.g., news articles about higher cancer rates among smokers). Still, these data give us at least an empirical look at what the marketplace of ideas looks like—how narrow or diverse it is—for each issue. And if news coverage is dominated by a couple of frames, which frames are those?
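One way to summarize how narrow or diverse the marketplace of ideas is for an issue is the effective number of frames, exp(H), where H is the Shannon entropy of the frame distribution. This measure is an illustrative choice, not one the authors report. The sketch below takes the percentages from Table 2 for three of its columns (omitting "Other", which is 0% throughout) and reports the top three frames and the diversity score for each.

```python
import math

# Frame labels and percentages as reported in Table 2 (1995-2014).
FRAMES = [
    "Legality, Constitutionality & Jurisdiction",
    "Political Factors & Implications",
    "Crime & Punishment", "Economic", "Cultural Identity", "Health & Safety",
    "Quality of Life", "Capacity & Resources", "Public Sentiment",
    "Fairness & Equality", "External Regulation & Reputation",
    "Security & Defense", "Morality & Ethics",
    "Policy Description, Prescription & Evaluation",
]
TABLE2 = {
    "Death Penalty": [38, 6, 16, 1, 4, 6, 4, 1, 2, 11, 2, 2, 5, 4],
    "Climate Change": [3, 18, 0, 11, 4, 3, 5, 26, 2, 0, 15, 1, 1, 10],
    "All Six Issues": [19, 18, 9, 8, 7, 6, 5, 4, 4, 4, 3, 3, 3, 8],
}


def top_frames(shares, n=3):
    """Names of the n frames with the largest shares."""
    ranked = sorted(zip(FRAMES, shares), key=lambda pair: pair[1], reverse=True)
    return [frame for frame, _ in ranked[:n]]


def effective_number_of_frames(shares):
    """exp(Shannon entropy): 14 if all frames get equal attention,
    1 if a single frame gets it all."""
    total = sum(shares)
    probs = [s / total for s in shares if s > 0]
    return math.exp(-sum(p * math.log(p) for p in probs))


for issue, shares in TABLE2.items():
    print(f"{issue}: top frames {top_frames(shares)}, "
          f"effective number {effective_number_of_frames(shares):.1f}")
```

Shares are renormalized because the published percentages are rounded and do not sum to exactly 100. The "All Six Issues" column pools issues with different dominant frames, which tends to raise its entropy, so the score is most informative when comparing individual issues.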

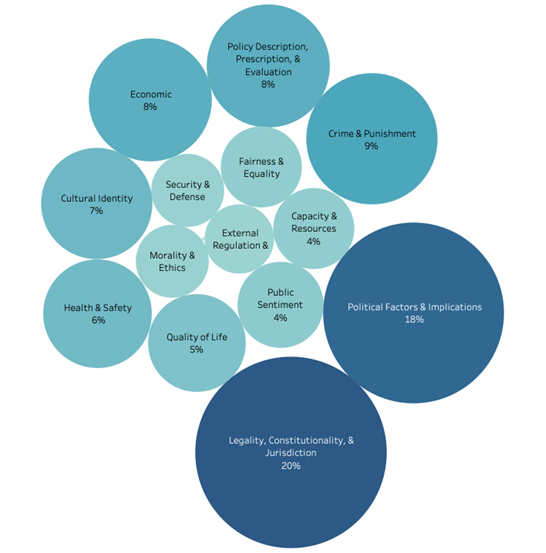

Table 2 shows, for each of our six issues (plus all six issues combined), the distribution of news coverage across all 14 frames, 1995-2014. For each issue, the top three frames are highlighted in yellow. We present this same data in graphic form in Figure 1 for all six issues combined to illustrate the overall distribution of frames conveyed to the public via the US news, at least for these issues.

As seen in Figure 1, two frames tend to feature prominently: political frames and legal frames. Framing issues in terms of politics (e.g., current negotiations or disputes between Republicans and Democrats on the issue) and legality (e.g., recent court rulings related to the issue) should surely be included in any accurate representation of the “reality” of a policy issue. But Table 2 and Figure 1 suggest a thought experiment: if we had omniscient understanding of the reality of a given issue, would political and legal framing belong so prominently in an accurate representation of that reality? Or perhaps another way of asking this question is: Whose reality is being accurately represented in this distribution of frames, and who is that reality privileging?

The dominance of political framing, in particular, should give us pause, since research shows that media coverage of party polarization increases people’s beliefs that the country is polarized and heightens people’s distaste for the opposing party. As a parallel, horserace coverage of elections (who is ahead, and by how much) may be accurate, but this red-vs-blue “game framing” can also distract potential voters from deeper considerations that might help them make informed decisions at the ballot box. In all, the graphs here suggest that legacy press coverage of these multifaceted issues is often a skewed presentation that favors some frames over others, not necessarily in line with what the truth of the issue would suggest.

The findings from our project suggest that in fact news coverage of a given policy issue tends to be dominated by only a few ways of thinking about the issue, thus potentially limiting the opportunities for people to consider the issue from many perspectives.

Table 2. The distribution of frames in news coverage by issue, 1995–2014

| Frame | Death Penalty | Gun Control | Immigration | Same-Sex Marriage | Climate Change | Smoking/Tobacco | All Six Issues |
|---|---|---|---|---|---|---|---|
| Legality, Constitutionality & Jurisdiction | 38% | 15% | 15% | 27% | 3% | 16% | 19% |
| Political Factors & Implications | 6% | 37% | 18% | 29% | 18% | 12% | 18% |
| Crime & Punishment | 16% | 8% | 13% | 0% | 0% | 4% | 9% |
| Economic | 1% | 3% | 7% | 3% | 11% | 21% | 8% |
| Cultural Identity | 4% | 5% | 9% | 6% | 4% | 8% | 7% |
| Health & Safety | 6% | 4% | 4% | 1% | 3% | 16% | 6% |
| Quality of Life | 4% | 1% | 8% | 7% | 5% | 4% | 5% |
| Capacity & Resources | 1% | 0% | 3% | 0% | 26% | 0% | 4% |
| Public Sentiment | 2% | 7% | 5% | 8% | 2% | 2% | 4% |
| Fairness & Equality | 11% | 1% | 2% | 5% | 0% | 0% | 4% |
| External Regulation & Reputation | 2% | 1% | 2% | 0% | 15% | 0% | 3% |
| Security & Defense | 2% | 5% | 5% | 0% | 1% | 0% | 3% |
| Morality & Ethics | 5% | 1% | 1% | 9% | 1% | 2% | 3% |
| Other | 0% | 0% | 0% | 0% | 0% | 0% | 0% |
| Policy Description, Prescription & Evaluation | 4% | 10% | 7% | 4% | 10% | 14% | 8% |

Figure 1. The distribution of frames in news coverage across all six issues combined, 1995–2014

Questions and Caveats (Or Measuring Legacy Journalism Consistency with the Facts, Effective Curation, and Perspective Diversity)

By Leticia Bode

If we’re thinking about media accuracy, we obviously need to think about two things: 1) what constitutes the media, and 2) what accuracy means.

Definitions of media are often complicated, but at the UCLA workshop, research mainly focused on journalism, and specifically on journalism in legacy media outlets (e.g., print and broadcast). That leaves out a lot of mediated content that people consume regularly, but I think it’s a reasonable restriction to make. Still, for clarity, it may be worth describing this as journalism accuracy rather than media accuracy.

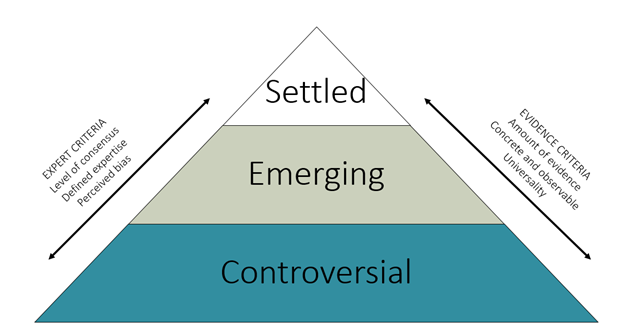

That brings us to the second question, about accuracy. For individual pieces of information, I have previously argued (Vraga & Bode, 2020) that we should consider two elements in combination when deciding whether something is true or false: 1) whether there is clear and compelling evidence, and 2) whether there is expert consensus about that evidence. Let’s call this version of accuracy something like consistency with the facts.

Breaking this down, the first element is evidence. If we don’t have any evidence about something, we can’t be confident in either direction: we don’t know whether it is true or not. The more evidence we have, the more concrete and observable that evidence is, and the more universal it is (that is, the more it seems to apply across many different contexts), the more confidence we have in the evidence and therefore in the related claim.

But in order to evaluate evidence, we need the second element: expertise. Some evidence is complicated, and the methods used to produce it can be inaccessible to the average person, so we have to rely on experts to help us evaluate it. When using available evidence to decide whether something is true or false, we ideally want consensus among experts, clearly defined areas of expertise, and as little bias and as few conflicts of interest as possible among those experts. When expertise is clearly identified, experts are considered relatively unbiased, and they all agree about the evidence, our confidence in the truth of a given piece of information grows.

Figure 1: Defining Misinformation

Courtesy of Vraga & Bode, 2020

But this is not an easy task. Even at the level of a single piece of information, there is often not enough evidence, experts disagree, or it is hard to even identify who the relevant experts are. As a result, relatively few topics rise to the level of “settled” in the pyramid in the figure.

This becomes even more complicated when we’re thinking about the accuracy of journalism – a much bigger, more complicated, and more diverse beast than a single piece of information.

First, there is a difference in evidence. Because of so-called “first-order journalistic norms” (personalization, dramatization, and especially novelty), breaking news makes up a large share of what the media cover (Boykoff & Boykoff, 2007). News is new; it’s right there in the name. Breaking news, by definition, simply will not have as much validated evidence associated with it (Shane & Noel, 2020). This means that a given statement in news coverage will often be an emerging issue (at best) rather than one that fits within settled science, making it more difficult to decide whether it is true or false.

Second, there is a difference in scope. Rather than containing a single piece of (mis)information, news stories often cover dozens of claims in just a few minutes or column inches. Assessing the accuracy of those claims, in terms of expert consensus and clear evidence, is a task of a completely different scale than assessing the veracity of a single statement, if only in terms of volume. Malicious actors sometimes use this to their advantage, in what Steve Bannon famously described as a strategy to “flood the zone with shit” (Stelter, 2021).
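Taken together, the two elements of the definition and the two complications above can be sketched as a toy decision rule. In the Python below, a hypothetical simplification rather than Vraga & Bode's own operationalization, each claim is assumed to carry 0 to 1 scores for evidence and expert consensus (how to produce such scores is left open), the thresholds are arbitrary, and the status labels borrow the essay's terms "settled" and "emerging". A story is then summarized by tallying the statuses of its many claims. The names `Claim`, `status`, and `story_profile` and the example scores are all made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical cut-offs on 0-1 scores; the essay gives no numeric thresholds.
MIN_EVIDENCE = 0.2
STRONG_EVIDENCE = 0.8
HIGH_CONSENSUS = 0.8


@dataclass
class Claim:
    text: str
    evidence: float   # assumed score: amount, concreteness, and generality of evidence
    consensus: float  # assumed score: agreement among relevant, relatively unbiased experts


def status(claim: Claim) -> str:
    """Coarse status of the evidence base for a claim (not whether it is true)."""
    if not (0.0 <= claim.evidence <= 1.0 and 0.0 <= claim.consensus <= 1.0):
        raise ValueError("scores must lie in [0, 1]")
    if claim.evidence < MIN_EVIDENCE:
        return "insufficient evidence"  # no basis for confidence in either direction
    if claim.evidence >= STRONG_EVIDENCE and claim.consensus >= HIGH_CONSENSUS:
        return "settled"  # both elements present: clear evidence and expert agreement
    return "emerging"  # some evidence, but not yet both elements


def story_profile(claims: list[Claim]) -> Counter:
    """Tally claim statuses for one story, which may contain dozens of claims."""
    return Counter(status(c) for c in claims)


# Hypothetical breaking-news story with made-up scores.
story = [
    Claim("Unemployment rose last month", evidence=0.9, consensus=0.9),
    Claim("The rise was caused by a new policy", evidence=0.4, consensus=0.3),
    Claim("Further layoffs are planned", evidence=0.1, consensus=0.0),
]
print(story_profile(story))
```

Requiring both scores to be high for "settled" mirrors the point that evidence without expert agreement, or agreement without evidence, is not enough; claims in breaking news would tend to land in the lower categories simply because evidence is still accumulating.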

But separate from the difficulty of implementing this definition is the question of whether it is the right one. To know how to measure accuracy, we need to decide what we want journalism to do in the first place. This is uncomfortable, because implicit in this question is a normative claim: what should journalism do? What should its goals be?

One possibility is what I’ve already described: we want the media to provide only information that is consistent with the best available evidence at the time they produce it (Bond).

But maybe, beyond being accurate, we also want the media to effectively represent events that happen in the world (Welbers; Wlezien). So when there is a terrorist attack, or when the unemployment rate goes up, we want the media to let us know. This seems reasonable, but more events happen in the world every day than could possibly be covered, even by a 24-hour news channel. So we rely on the media to curate the possible universe of events down to the most important ones. This is where we really get into a sticky spot, because what is perceived as important will vary depending on who you are, where you live, and what your lived experiences are. Perhaps this means we care more about effective curation than about accuracy. A second issue with this way of thinking about accuracy is that we often don’t have a good sense of what the reality the media may or may not be reflecting actually looks like. Only rarely do we have an objective and easily measurable sense of reality. If we don’t usually have a set of events to compare media coverage to, how do we know whether those events are being accurately portrayed?
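In the rare cases where a measurable indicator does exist, one narrow way to quantify effective representation is to ask whether coverage volume rises and falls with that indicator over time. The Python below is a minimal sketch of that idea using a Pearson correlation; the numbers are made up for illustration, the names `pearson`, `unemployment_rate`, and `unemployment_stories` are hypothetical, and correlating levels (rather than, say, changes or lagged responses) is an assumption, not necessarily the approach taken in the work cited above.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (n >= 2)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


# Hypothetical illustration only: made-up monthly values, not real data.
unemployment_rate = [3.6, 3.5, 3.8, 4.4, 6.0, 5.2]     # the "reality" series (%)
unemployment_stories = [12, 10, 15, 40, 95, 60]        # stories mentioning it per month

print(round(pearson(unemployment_rate, unemployment_stories), 2))
```

A high correlation only says that coverage tracks this one indicator; it says nothing about which other events were left out, which is exactly the curation question the paragraph above raises.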

Still another possibility is that we care about the media offering different perspectives. Just as the media play a major role in curating information for the public (helping to clarify what is relevant and important), they also play a major role in framing issues for the public. Part of this is providing background information and context, sometimes thought of as thematic framing (Gross, 2008). But framing can also convey the diversity of perspectives that exist on a given issue; we might call this perspective diversity. This is really important, but once again we don’t always have a clear reality to compare it to. Should frames be equally distributed? Should they reflect some underlying distribution that exists in the public? If so, how can we gain leverage on what that distribution might be?
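The two benchmarks raised here, an equal distribution of frames or some underlying distribution in the public, correspond to two simple comparisons once frame shares have been measured (as in Table 2 earlier in this report). The Python below is a sketch under that assumption, and the metric choices are ours rather than the essay's: normalized Shannon entropy ("evenness") compares a distribution to the equal-distribution benchmark, while total variation distance compares it to any other reference. The reference shares in the usage lines are hypothetical, since, as the paragraph notes, the true public distribution is hard to know.

```python
import math


def normalize(shares):
    """Rescale non-negative shares so they sum to 1."""
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must contain a positive value")
    return [s / total for s in shares]


def evenness(shares):
    """Normalized Shannon entropy: 1.0 = all frames used equally, 0.0 = a single frame."""
    p = normalize(shares)
    if len(p) < 2:
        return 0.0
    h = -sum(x * math.log(x) for x in p if x > 0)
    return h / math.log(len(p))


def total_variation(shares, reference):
    """Half the L1 distance between two distributions (0 = identical, 1 = disjoint)."""
    if len(shares) != len(reference):
        raise ValueError("distributions must cover the same frames")
    p, q = normalize(shares), normalize(reference)
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


# Hypothetical four-frame example: coverage shares vs. an assumed public distribution.
coverage = [60, 25, 10, 5]
public = [30, 30, 20, 20]
print(round(evenness(coverage), 2))
print(round(total_variation(coverage, public), 2))  # 0.3
```

Which of the two numbers matters is exactly the normative question: a low evenness is a problem only if frames should be equally distributed, and a large distance from the public is a problem only if coverage should mirror that reference.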

What we think is accurate depends on what we think journalism should do. We can measure all of these different pieces (consistency with the facts, effective curation, and perspective diversity), and we should! But whether any of them reflects accuracy is a normative judgment that has to be made entirely separately from how we measure things.

References

Boykoff, M. T., & Boykoff, J. M. (2007). Climate change and journalistic norms: A case-study of US mass-media coverage. Geoforum, 38(6), 1190–1204. https://doi.org/10.1016/j.geoforum.2007.01.008

Gross, K. (2008). Framing persuasive appeals: Episodic and thematic framing, emotional response, and policy opinion. Political Psychology, 29(2), 169–192. https://doi.org/10.1111/j.1467-9221.2008.00622.x

Shane, T., & Noel, P. (2020, September 28). Data deficits: Why we need to monitor the demand and supply of information in real time. First Draft. https://firstdraftnews.org:443/long-form-article/data-deficits/

Stelter, B. (2021). This infamous Steve Bannon quote is key to understanding America’s crazy politics. CNN. https://www.cnn.com/2021/11/16/media/steve-bannon-reliable-sources/index.html

Vraga, E. K., & Bode, L. (2020). Defining misinformation and understanding its bounded nature: Using expertise and evidence for describing misinformation. Political Communication, 37(1), 136–144. https://doi.org/10.1080/10584609.2020.1716500