Abstract

“The others are coming. They are coming to get us, take over our country, colonize us, and replace us. They’re an existential threat.” These types of racist logics are a regular trope around the world. Some governments incorporate this type of messaging into disinformation campaigns, which have ripple effects of unintentional misinformation on social media. None of this is limited to particular national boundaries. Rather, racist threat-based messaging in one country is picked up in another by politicians or ordinary people and spread virally. This is happening in the US, for example, with the American right-wing media amplifying Hungarian prime minister Viktor Orbán’s anti-migrant rhetoric in relation to migration into the US from Latin America. In the case of the US, these globalized racist circuits serve to provide forms of “evidence” through state-sponsored disinformation (e.g., Russian campaigns) and misinformation (often circulated by average people) that label the US as being under attack just like other countries (e.g., COVID-19 is a manufactured Chinese attack propagated by 5G or Chinese restaurants serve tainted wild meats). Similarly, like marginalized minorities in other countries (e.g., Muslims in Hungary), Black Lives Matter (BLM) activists in the US are branded as domestic terrorists. What many often do not realize is that certain tropes that are particularly magnified have been circulating for a long time. They are reboots that seem new as they take a social media format or even cross to a new platform, as in the Chinese restaurant example. In some ways, this can make the misinformation even more dangerous as average people help racist falsehoods go viral on social media, particularly on smaller, niche platforms with less content moderation oversight. However, stakeholders can watch for these narratives re-emerging and proactively intervene.

Introduction

Niche social media platforms expose right-of-center individuals to racist misinformation and polarizing race-related content online. In my own research, which explores misinformation circulating on the niche U.S. social media platform Parler and globally on TikTok, I found many examples of Muslims around the world heavily othered and framed as criminals, invaders, and rapists. The perceived Muslim threat (particularly of migrants) in many Western countries has much in common with Renaud Camus’ (2018) “The Great Replacement,” first published in 2011, which positions European countries as ethnically homogeneous, but under threat as populations are being “replaced” by migrants. Camus’ conspiracy theory has been part of a “weaponization” on social media (Cosentino, 2020) and has been particularly mobilized against Muslims, making this framing popular with the right wing in many countries.

As my work highlights, this process takes place not just in the US, but also on a global scale. Mis- and disinformation do not stop at national borders but instead flow between various actors, changing their form to adapt to local conditions but retaining their essence. This globalized misinformation accrues additional valences as it circulates: racist misinformation can link to misogyny, ableism, homophobia, and other prejudices. At the moment, scholars are not always well equipped to trace these misinformation flows. Scholars need to develop research methodologies that take seriously the global reach of right-wing political movements as well as individual bad actors and their potential for radicalizing center-right people. Journalists, policymakers, and the broader public should be aware of these dynamics as just a handful of bad actors can have outsized impacts in deploying misinformation that targets specific ethnic or racial minority groups.

Other scholars (e.g., Kolko, Nakamura, & Rodman, 2013; Sharma, 2013) have mapped out international circuits/flows of racism. It turns out the transnational flow of racist ideas is not restricted to specific time periods, but is more long-term and enduring. Indeed, racist information flows are a part of media throughout history. They are globalized and they travel further and faster through digital communication media. Much of the work on these racist information flows is well developed but has not been particularly focused on the mis- and disinformation space. Therefore, the notion of globalized racism bundles misinformation and disinformation within a threat-based racist paradigm that draws upon other traditional marginal identities (e.g., gender, sexuality, and ability). Social media has accelerated the globalized propagation of racist narratives and the misinformation and disinformation that they draw in.

In the case of the US, misinformation and disinformation have been used to specifically target ethnic and racial communities. For example, in the 2020 presidential election, Black people in the US were specifically targeted through calls by right-wing actors with untrue claims about voting. Black voters received calls falsely informing them that their personal information contained in mail-in ballots would be used by police to enforce outstanding warrants, that credit card companies could use it to collect existing debts, and that individuals would be added to mandatory COVID vaccine lists (Lerman, 2021; Murdock, 2021). Moreover, certain communities of color are more vulnerable to these types of campaigns than others. This may be due to historical social inequalities (e.g., educational, socio-economic, etc.), language (with groups being targeted sometimes being new immigrants to the US with limited English-language skills), or tentative or illegal immigration status.

Racial targeting in dis-misinformation is not new – it’s a reboot

In the specific case of the COVID-19 pandemic, some right-wing groups blamed Asians and Jews for the coronavirus (Greenblatt, 2020; Oxford Analytica, 2020). Indeed, Asians have had to consider whether wearing face masks would make them targets (Choi & Lee, 2021) and have suffered negative health outcomes due to some of the choices they have made or the undue fear and anxiety being placed on them (Chen, Zhang, & Liu, 2020; Gao & Liu, 2021). Even users with names perceived to be Asian on chat platforms such as 4chan have faced cyber-attacks (Nick, 2021). These types of targeting have had devastating mental and physical impacts on lives not just in the US, but beyond (Vega Macías, 2021).

This may appear as new types of discourse or even new threats. However, targeting Asians is not new and some elements seen today on social media are reboots of the past. For example, in my work studying Parler, I saw posts with old misinformation about Chinese restaurants serving dog meat, part of an older trend of targeting Chinese restaurants. New misinformation on social media during COVID-19 claimed that bat-fried rice was being served at Chinese restaurants (King, 2020). However, these stories were much the same as pre-pandemic anti-Chinese misinformation, just substituted with different meats. A disproportionate number of Chinese restaurants closed during the pandemic (Romeo, 2020). Though seemingly new, misinformation packaged within COVID-19 or other current issues plays on older, existing racist tropes. Moreover, if one’s followers on social media platforms such as Parler are sharing these types of posts, they are received by users as word-of-mouth and can have extensive impacts on individual beliefs and behavior.

Disinformation to discredit progressive movements

Disinformation is also being used by foreign state actors—most notably the Russian government’s Internet Research Agency (IRA)—to further polarize people in the US along racial lines. For example, in a study of an IRA dataset released by Twitter, I found tweets where IRA accounts sought to frame mass shootings in the US as perpetrated by “white male terrorists” ostensibly to inflame contentious arguments, stoke division, and rile angry, emotional, racialized reactions:

“This beautiful 17 month old baby girl, Anderson Davis, was shot in the face with an AR-15 today in Odessa, Texas; 5 were people killed, 21 injured in another mass shooting, in ANOTHER WHITE MALE DOMESTIC TERRORIST ATTACK […]”

This tweet[1], which was posted by @AfricaMustWake (an account with a profile picture of a Black woman wearing a traditional African head wrap), was directly responded to as well as retweeted.[2] Some Twitter users positively responded to the tweet’s framing of white males as domestic terrorists, whereas other users strongly opposed this framing and some argued back in response (e.g., “media paints white people [sic.] as bad [when the] majority of shootings are black people you just don’t hear about them.”)[3] Some also called out the insertion of race into the discourse (e.g., “Injecting #race N2 this discussion is irresponsible & dangerous.…”[4]). These responses make clear that IRA tweets were successful in further polarizing some Twitter users along racial lines.

Disinformation has also been aimed at discrediting activist movements that can bring real empowerment and positive change to Black people in the US. For example, Black Lives Matter was quickly branded through disinformation campaigns as a “thug” movement on platforms including Facebook (Bauerlein & Jeffery, 2021). These types of campaigns push some within communities of color to mobilize against movements, resources, or programs that are designed to benefit their communities. Findings from my own studies of social media posts by individuals from racial and ethnic groups demonstrate this (Murthy, forthcoming).

The repeated use of wedge issues like immigration by these types of campaigns has real power to adversely affect minority communities. In the case of the US, IRA campaigns sought to discourage African-Americans from voting for Hillary Clinton or even voting at all (Bastos & Farkas, 2019). Moreover, the IRA sought to manipulate the hashtag #BlackLivesMatter on both sides of the political spectrum (left-leaning and right-leaning) in order to polarize the two sides further (Arif, Stewart, & Starbird, 2018). This type of content had the potential to draw those on the fence over to one side or another in terms of their perception of Black Lives Matter (Murthy & Kim, 2021). Violence by right-wing extremist groups against Black Lives Matter protesters could have indeed been linked to right-wing social media influencers that were also posting this type of content on social media.

Global circuits: racist mis- and disinformation trends evade borders

Mis- and disinformation are often not self-contained within the borders of a country; themes and tropes common to one political culture can be mapped onto another. We can see this at work in recent events. Some right-of-center Americans’ exposure to European anti-immigrant politics has led them to sympathize with Eastern European anti-immigrant rhetoric and politics. The image common to both the European and U.S. contexts is that of the so-called “migrant caravans” deployed by right-wing media as a way to demonize immigrants as criminals trying to infiltrate the US (Freeman, 2018). For example, right-wing American media such as Fox News, Newsmax, and One America News Network have regularly promoted the “Great Replacement” conspiracy, which is framed as a deliberate, coordinated effort to replace white people with non-Western immigrants.[1] The conspiracy’s proponents portrayed migrants from Syria, Afghanistan, and Africa to Europe as rapists or, at best, coming to steal Europe’s resources (e.g., Cortes, 2021), following efforts in 2018 by right-wing media in the U.S. to say the same about Central American asylum seekers to the United States (Moritz-Rabson, 2018; Perea, 2020). In this case, white nationalists in the U.S. are borrowing from like-minded Europeans, affirming the power of these global circuits.

There is a deep fascination and intellectual connection between some right-wing American intellectuals and far-right leaders around the world. This particularly applies to Hungarian Prime Minister Viktor Orbán, who is known for promoting extreme rhetoric about immigration (e.g., he has described refugees as “Muslim invaders”) (Smith, 2021), free speech, and many other tropes and touchstones that are popular among the US right-wing. Some on the American right adore Orbán and strongly buy into his “Great Replacement”-inspired narrative that labels migrants as an “existential threat” (Obaidi, Kunst, Ozer, & Kimel, 2021). This rhetoric resonates with U.S. right-wing media and has been adapted into their own threat-based narratives. For example, in August 2021, the immensely popular Fox News presenter Tucker Carlson visited Orbán and made the Hungarian leader highly visible to Americans, who were unlikely to have been familiar with him or far-right European politics. Of course, Carlson never paid heed to the violence against Turks and other immigrant groups who have actually been in Hungary, in some cases for decades. Rather, Carlson specifically helped these transnational right-wing networks by declaring on air that “George Soros hates the United States” (Langer, 2022). Carlson also made a fictitious documentary called “Hungary vs. Soros,” which was widely promoted in the U.S. through Facebook ads (Gogarty, 2022). The documentary provides, in a Fox reporter’s words, “an inside look at the alleged secret war being waged by billionaire investor George Soros” against Hungary and the democratic world more broadly.

Orbán argued that even third-generation Muslims in France were still immigrants and that “we would like Europe to remain the continent of Europeans” (Mudde, 2019). It is unsurprising that Orbán’s misinformation (e.g., about the IMF, Ukraine, and George Soros trying to subjugate European nations) is believed in Hungary, given Orbán’s totalitarian control of media in the country (Haraszti, 2019). However, his focus on framing immigrants as invaders of Europe has resonated with some influential European politicians since 2015, leading to an anti-immigration stance that has been called “The Orbánization of Europe” (Alekseev, 2022). Part of Orbán’s popularity outside of Hungary is a byproduct of a “more transnationally networked political right across Europe and the United States” where the “emerging digital news ecology on the right seeks to link up to the broader information environment across borders” (Heft, Knüpfer, Reinhardt, & Mayerhöffer, 2021). This transnational, networked infrastructure has facilitated the spread of racist misinformation on social media. In European countries, this network has been deployed around wedge issues blaming particular groups (e.g., “migrants”) during Brexit (e.g., “the dissemination of false information on immigration and being ‘flooded’ by refugees” [Hajimichael, 2021]) or more broadly during immigration debates (e.g., “memes on refugees being ‘fakesters,’ ‘frauds,’ and ‘fiends’” [Hajimichael, 2021]). Though not all racist lies are part of disinformation, some are, as in both the Brexit and meme examples. For example, Breitbart London pushed a story in 2017 that 90% of asylum-seekers in Austria are welfare recipients, and its accompanying tweets imply that this is part of a long-term plan to take over Austria (Tomlinson, 2017).
This story was then made more pointedly Islamophobic when picked up by niche blogs and sites that portrayed all asylum-seekers in Austria as Muslims and falsely smeared them as freeloaders: for example, Jihad Watch argued that immigration is an existential threat, claiming that Muslims believed it was their right to be on welfare and be supported by non-Muslims (Spencer, 2017). Moreover, racist lies also easily become part of misinformation, when content like “Muslims cheering in Jersey City on September 11, 2001” or “Mexico is sending rapists and murderers to the US” (Heckler & Ronquillo, 2019) is shared widely online.

These types of global racist circuits are hardly new. Much of these transnational flows from Europe to North America and back again are part of colonial and postcolonial configurations. In his framing of the Black Atlantic, Paul Gilroy (1993) argues that racist logics flowed from Europe across the Atlantic and to the colonies as well as back to Imperial centers, which in turn radiated back out to colonies. These ebbs and flows of racist thought were part of the underpinning of slavery and colonialism. Today, the movements are somewhat different and follow what Appadurai (1996) calls “cultural traffic,” made possible by migration, technology, and media. He frames each of these conceptually: “ethnoscape,” “technoscape,” and “mediascape,” respectively (Appadurai, 1996). Though he posits more “scapes,” his basic premise is that cultural formations today are a product of these interactions. Following both Gilroy and Appadurai, the cultural manifestations of far-right discourse in Hungary and many other countries are a product of these “scapes” and their intersections as well as colonial histories that have framed Muslims as others and invaders.

In some cases, there is a disinformation component, where governments are consciously engaging in racist campaigns from which misinformation is a byproduct passed on by non-influencers and non-politicians, again through an interaction of mediascapes and technoscapes. Some mainstream platforms, like Twitter pre-Elon Musk, have responded in a variety of countries and contexts to mis- and disinformation. Generally, they have engaged in more aggressive content moderation and even de-platforming in countries outside the U.S., given relatively lax American laws that protect racist and violent speech (Breckheimer, 2002). Right-wing users, in the US and globally, have found that they no longer have a space to operate completely without scrutiny or sanction, and so they have moved to niche “free speech” platforms such as Parler, Gab, and Gettr. Ultimately, without moderating counter-narratives, it can become quite easy for right-of-center individuals to become radicalized on non-mainstream apps with little content moderation through continual exposure to messages promoting violence and extremism.

Technological affordances of platforms expose right-of-center users to endemic racism

While scholars continue to study niche platforms, there remains a real dearth of race-related research within these platforms, which is the focus of my work. The lack of research focused on race is particularly concerning as “[t]he content circulating in these spaces [Parler, Gab, etc.], then, often oscillates between reasoned hyper-conservatism and more strident incitements to violence, between barely-suppressed racism and explicit racial slurs…” (Munn, 2021). Moreover, some racist mis- and disinformation being incubated on these platforms has been tremendously effective in harming ethnic and racial minorities in the US and abroad.

For example, Parler, a U.S.-focused platform that has historically been home to de-platformed users, was itself de-platformed by Amazon for its central role in the violent January 6, 2021, U.S. Capitol Insurrection (Munn, 2021). Though the platform went offline due to this and searched for new hosting providers, it is now back online and is in the process of being acquired by Ye (formerly known as Kanye West), who was de-platformed due to anti-Semitic comments on Twitter and Instagram.

In my own work studying Parler (Kolluri, Kolluri, Venkatesh, Vinton, & Murthy, 2023), I have found cases where individuals who had no apparent interest or affiliations with racist militias (e.g., the Boogaloo movement) or other extreme groups replied to or engaged with extreme racist content. My method to trace the evolution of racist misinformation on Parler is to identify and measure information cascades, which are a self-reinforcing process that causes a chain reaction or snowball effect wherein people repeat an action that others have previously done or adopt a belief that others hold through the observation of others. In other words, people believe something must be true because everyone is saying it’s true (Gieseller, 2004). Information cascades have been established as a “crucial step in gaining a better understanding of how information spreads” (Bishesh, 2022) and have been used successfully to study large volumes of diffusion on online platforms such as Twitter (Hui, Tyshchuk, Wallace, Magdon-Ismail, & Goldberg, 2012). On social media, information cascades can be created by reposting a message or engaging with a post (e.g., liking, favoriting, etc.) merely because others have done so. Indeed, messages being shared in cascades on social media may even be illogical, untrue, or recognizably sensationalized, but are shared and engaged with in high volumes nonetheless. The compulsion to follow the crowd (Lee, Hosanagar, & Tan, 2015) holds true online as well (Shang, Hui, Kulkarni, & Cuff, 2011).

In the case of Parler, we identified single Parler posts containing original racist content and then traced their echoes (Parler’s equivalent of retweeting). The posts are a reflection of the fact that an information cascade can draw eyeballs to its content, ultimately leading to a situation wherein more centrist users are drawn to echo extreme content. Through this approach, our work found that not only can individual Parler users have an outsized impact on influencing “average” users, but they can do it quite quickly.
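The tracing step described above can be sketched in a few lines. This is an illustration only, not the procedure used in Kolluri et al. (2023): the function name `trace_cascade`, the `echoes` mapping, and the toy post ids are all hypothetical, and real Parler data would need to be reshaped into this form first. The sketch assumes each post records which posts echoed it and walks outward from a seed post, counting how many posts the cascade reaches and how long its longest chain of echoes is.

```python
from collections import deque


def trace_cascade(seed_id, echoes):
    """Breadth-first walk from a seed post through its echoes.

    `echoes` maps a post id to the ids of posts that echoed it
    (a hypothetical structure, not Parler's actual data format).
    Returns (size, depth): the number of posts reached, including
    the seed, and the longest chain of echoes starting at the seed.
    """
    seen = {seed_id}
    queue = deque([(seed_id, 0)])
    depth = 0
    while queue:
        post_id, level = queue.popleft()
        depth = max(depth, level)
        for child in echoes.get(post_id, []):
            if child not in seen:  # guard against repeated ids
                seen.add(child)
                queue.append((child, level + 1))
    return len(seen), depth


# Toy data: "a" is the seed; "b" and "c" echo it; "d" echoes "b".
toy_echoes = {"a": ["b", "c"], "b": ["d"]}
size, depth = trace_cascade("a", toy_echoes)
print(size, depth)  # prints: 4 2
```

Size and depth are only two of many possible cascade measures; they are used here because they correspond loosely to how widely and how far a seed post travels.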

Parler’s influencers also post content that could radicalize centrist people over to more extreme positions, including those supporting physical violence against marginalized ethnic and racial communities. For example, in the case of COVID-19, Asian people were framed as causing the pandemic (Miyake, 2021) and this provided a strawman distracting from necessary public health interventions like masking mandates, social distancing, and periodic testing. Some Parler influencers tended to use wedge issues such as COVID-19-related health policies (especially promoting anti-masking). As the broader research has found, masking became a modality to “escalate racial discriminations and violence against Asian immigrant groups” (Choi & Lee, 2021).

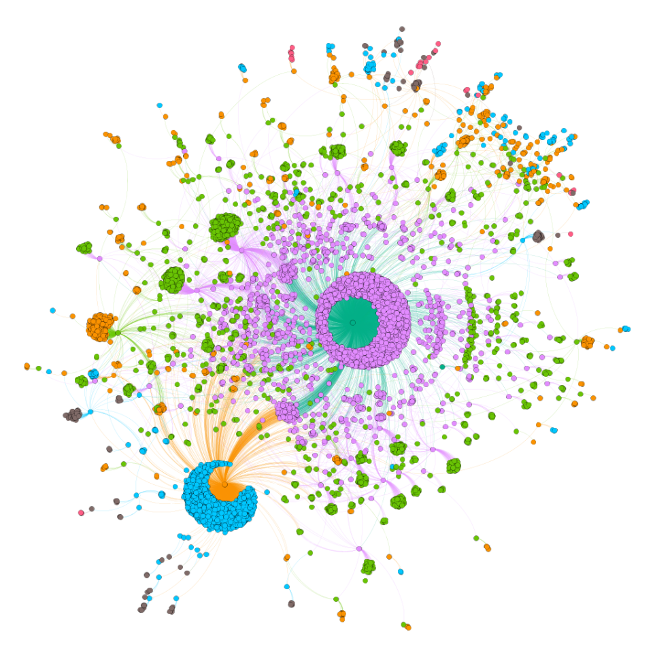

When we studied specific racist information cascades, we noticed some posts deployed a bevy of racist hashtags as a means to foment further racist discourse (e.g., #hitlerwasright) (Kolluri et al., 2023). Ultimately, we found that racist content spread to many corners of Parler through complex networks. Figure 1 illustrates the example of a specific cascade about the controversial hiring of Sarah Jeong to the editorial board of the New York Times. Jeong was heavily critiqued online in August 2018 for vitriolic tweets she posted in response to racist Internet trolls. Jeong’s caustic tweets “included examples of some of the hate speech she said had been directed at her online, including racist slurs,” but directed toward white people. The cascade’s initial post from April 2020, nearly two years after the controversy, is represented as a green circle at the center of the purple ball of posts in Figure 1. In it, a Parler user branded Jeong as anti-white and racist toward white people. The post also argues that Jeong hates “Jews and support[s] Islamist terrorists who want to commit genocide against Jews and Christians” and that Democratic lawmakers such as Ilhan Omar and Rashida Tlaib are Islamic terrorists by including #JihadSquad and #TerrorSquad with their names.

Figure 1: Example of a Parler Cascade that Spawns Islamophobic Posts

From this initial seed post, the cascade’s content increasingly becomes more derogatory and Islamophobic as it traverses through the network where every small circle represents a post. The initial post about Jeong leads to engagement around that post but eventually snowballs into an Islamophobic cascade that includes ad hominem attacks on Jeong calling her a “Muslim Terrorist,” posts that reference “genocidal Islamists in our congress,” and posts calling Muslims parasites who have “suck[ed]” Europe “dry, [and] now they’re on their way to the United States!!!.” This iteration of posting eventually leads to connections with the global circuits of racism around the “great replacement” theory, which frames Muslims as invaders that threaten Western civilization. These types of posts enable the cascade to further develop, and even spawn a following cascade that is represented by a single derogatory post at the center of the light blue “ball” of posts in Figure 1.

The dynamic of information cascades may be heavily influenced by automated campaigns, which can have an outsized impact on the spread of malicious content. My work has found that some of the users that have successfully generated long information cascades were bots, not human users. Specifically, of the roughly 38,000 Parler accounts with racist content, approximately 5% were bots. Leveraging automation is unsurprising as highly charged words can drive increased virality. This indicates that intentional campaigns were conducted via automated accounts to influence the discourse (racist and otherwise) on Parler.

For those trying to understand this dynamic on Parler and other platforms, research methods will need to be developed to identify bots prior to generalizing the role of human actors in the propagation of extreme content of all types, not just racist content. In our own work, we traced cascades using some of the most offensive racist slurs in Europe and the U.S. Though a small number of Parler users used, for example, the n-word, its use had the highest likelihood of leading to the longest information cascades. Users also combined multiple types of racist messaging, most often with references to Black Lives Matter (BLM). One highly influential user who created significant anti-Black information cascades posted content such as this on the platform, “[…] blm wants a black republic with only blacks like haiti …god luck with that f*ing maniacs !!!” along with 10 hashtags (including #whitelivesmatter). The same user also posted “wtf???come back to your muslim hole […]” and “[…] i m learning arab i have something to say to blm muslim bastards.” As the last two examples highlight, anti-Black content is merged with Islamophobic content. This fusion results in archetypal content that helps fuel the international circuits of racist content and ideas. In other words, the development of these racist cascades serves as a type of proving ground, where, if they get picked up within Parler, they gain visibility and that visibility crosses over to news media, other platforms, and, ultimately, international reach.

The link this user draws between a pro-Black—but largely demonized on the political far-right—social movement in the United States and anti-Muslim sentiment follows the tactics of the Russian Internet Research Agency (IRA), a Russian-backed company based in St. Petersburg, which is best known for its proven role in using fake accounts and disinformation to manipulate the 2016 US presidential election and the 2016 British European Union exit referendum (BREXIT) (Bastos & Farkas, 2019; Dawson & Innes, 2019). Specifically, the IRA used Black Lives Matter as a wedge issue in the 2016 U.S. presidential election in order to further polarize U.S. voters by amplifying content that portrayed BLM activists as having an “exuberant,” “uncompromising[,] and adversarial stance towards law enforcement” (Arif et al., 2018). However, unlike the IRA campaign which sought to portray BLM activists as anti-white, we found many Parler posts that position BLM as extremist and even as a vehicle for domestic terrorism, e.g.:

“[…] BLM is a domestic terrorist Marxist group. […]”[5]

“blm terrorist group !!!#parler #wwg1wga #maga #kag #newuser #news #trump2020 #trump #america #qanon #qarmy #areqawake #patriots #keepamericagreat #thegreatawakening #stopcensorship #freedom #freespeech #teamtrump #republican #democrats #joebiden #antilgtb #whitelivesmatter #police #bluelivesmatter #christian follow,echo and vote please”

What these examples highlight is that the racist content on Parler is much more extreme than the content propagated by the IRA on mainstream platforms such as Twitter. On Parler, highly inflammatory rhetoric like labeling BLM as a “domestic terrorist Marxist group” is more common. Moreover, a large number of hashtags are mobilized to further the connected nature of these posts. Indeed, most of the second post consists of hashtags. Besides furthering the acquisition of a broader audience on the platform, the use of these hashtags has value in cementing partisan identity. As Mason argues, partisanship is more about identity than a tabulation of political views (Mason, 2018). Mason therefore argues that people then adopt the views of others when they see them as identity peers. This is also why we used information cascades as a tool to measure the development of ideas: cascades don’t measure a buy-in to what is retweeted, per se. Rather, a user’s participation—or indeed being swept into a cascade—may be more about identifying with a political tribe rather than a deep engagement with content.

What should the field be focusing our energies on?

Democratic institutions and society would benefit if journalists worked to uncover influencers on more niche, extreme platforms and highlight their reach and impact. Furthermore, it is of critical importance to identify the role state-based actors might be playing in automated disinformation campaigns. Scholars can help with this work if they actively study niche platforms both as they are created and also longitudinally as they evolve; for example, it remains to be seen what the acquisition of Parler by Ye will do to the platform. If one logs on to Parler out of curiosity, they are likely to see a partisan, self-selecting group, as a study of Gettr indicated of left-leaning people who logged onto the platform to “see what the ‘other’ side thinks” (Sharevski, Jachim, Pieroni, & Devine, 2022).

Ultimately, it is important for us to know that platforms like Parler, though fringe, have real and large impacts on mainstream media discourse in both social media and traditional media. Furthermore, these platforms reinforce global circuits of misinformation that amplify the positions taken by extreme-right politicians and political groups around the world and help foster connections between them. A major point of Parler has been to carve out a “safe space” for conservatives to talk with people who are like them and post content that would get them de-platformed from mainstream apps, though that may change on Twitter post-Elon Musk’s acquisition. Niche platforms tend to engage the curious; for example, a study of Gettr found that right-leaning users who had received “nasty remarks” over content they “did not consider controversial” joined Gettr as a result. Therefore, we can assume a certain amount of the allure of far-right, niche social media platforms such as Parler is that users feel that they are being targeted on more mainstream platforms. Then, when they become active on niche platforms, they may be susceptible to more extreme ideas, which is then accelerated by influencers gaining greater reach through Parler’s content discovery algorithms.

One of the key problems that this reflection is trying to highlight and open debate on is the susceptibility of already marginalized racial, ethnic, and religious minorities globally to misinformation and disinformation that is specifically targeting them. One specific thing that we should reflect upon far more is how racist misinformation and disinformation have become endemic within various social media spaces. Ultimately, the content that appears on niche, right-wing platforms like Parler does not exist in a vacuum and does not merely involve individual actors. Rather, in the case of the IRA and their manipulated branding of #BlackLivesMatter as a violent “thug” vigilante movement, prominent right-wing figures like former U.S. President Donald Trump mirrored these messages. Therefore, internet radicalization on platforms like Parler bleeds into politics and vice versa. In the case of BLM, this continues to have real consequences for Black activists today, who may be targeted by right-wing groups who believe them to be domestic terrorists. Global iterations of this type of circuit also urgently need more scholarly attention. As examples in this essay have shown, people in Hungary or the U.S. who were on the fence or more centrist may become politically radicalized in the face of persuasive, endemic racist misinformation and disinformation. It is important for the field also to expend our energies on studying smaller global niche platforms to spur higher levels of platform accountability. It is important now more than ever for academic researchers, think tanks, government agencies, and others to critically question what is happening on these smaller, yet potentially more dangerous social media platforms.

References

Alekseev, Alexander. 2022. “‘It Is in the Nation-State That Democracy Resides’: How the Populist Radical Right Discursively Manipulates the Concept of Democracy in the EU Parliamentary Elections.” Journal of Language and Politics 21 (3): 459–83. https://doi.org/10.1075/jlp.20022.ale.

Appadurai, Arjun. 1996. Modernity at Large: Cultural Dimensions of Globalization. Public Worlds 1. Minneapolis, Minn.: University of Minnesota Press.

Arif, Ahmer, Leo Graiden Stewart, and Kate Starbird. 2018. “Acting the Part: Examining Information Operations Within #BlackLivesMatter Discourse.” Proceedings of the ACM on Human-Computer Interaction 2 (CSCW): Article 20. https://doi.org/10.1145/3274289.

Bastos, Marco, and Johan Farkas. 2019. “‘Donald Trump Is My President!’: The Internet Research Agency Propaganda Machine.” Social Media + Society 5 (3). https://doi.org/10.1177/2056305119865466.

Bauerlein, Monika, and Clara Jeffery. 2021. “Why Facebook Won’t Stop Pushing Propaganda.” Mother Jones, March 2021. https://www.motherjones.com/politics/2021/08/why-facebook-wont-stop-pushing-propaganda/.

Bishesh, Bithika. 2022. “Cascading Behavior: Concept and Models.” In Social Network Analysis: Theory and Applications, 175–203. New York: Wiley.

Breckheimer, Peter. 2002. “A Haven For Hate: The Foreign and Domestic Implications of Protecting Internet Hate Speech Under the First Amendment.” Southern California Law Review 75: 1493–1529.

Chen, Justin A., Emily Zhang, and Cindy H. Liu. 2020. “Potential Impact of COVID-19–Related Racial Discrimination on the Health of Asian Americans.” American Journal of Public Health 110 (11): 1624–27. https://doi.org/10.2105/ajph.2020.305858.

Choi, Hee An, and Othelia EunKyoung Lee. 2021. “To Mask or To Unmask, That Is the Question: Facemasks and Anti-Asian Violence During COVID-19.” Journal of Human Rights and Social Work 6 (3): 237–45. https://doi.org/10.1007/s41134-021-00172-2.

Cosentino, Gabriele. 2020. “From Pizzagate to the Great Replacement: The Globalization of Conspiracy Theories.” In Social Media and the Post-Truth World Order: The Global Dynamics of Disinformation, edited by Gabriele Cosentino, 59–86. Cham: Springer International Publishing. https://doi.org/10.1007/978-3-030-43005-4_3.

Dawson, Andrew, and Martin Innes. 2019. “How Russia’s Internet Research Agency Built Its Disinformation Campaign.” The Political Quarterly 90 (2): 245–56.

Ekman, Mattias. 2022. “The Great Replacement: Strategic Mainstreaming of Far-Right Conspiracy Claims.” Convergence 28 (4): 1127–43. https://doi.org/10.1177/13548565221091983.

Gao, Qin, and Xiaofang Liu. 2021. “Stand against Anti-Asian Racial Discrimination during COVID-19: A Call for Action.” International Social Work 64 (2): 261–64. https://doi.org/10.1177/0020872820970610.

Gieseller, Steven Geoffrey. 2005. “Information Cascades and Mass Media Law.” First Amendment Law Review 3 (2): 301–34.

Gilroy, Paul. 1993. The Black Atlantic: Modernity and Double Consciousness. Cambridge, Mass.: Harvard University Press.

Greenblatt, Jonathan A. 2020. “Fighting Hate in the Era of Coronavirus.” Horizons: Journal of International Relations and Sustainable Development, no. 17: 208–21.

Hajimichael, Mike. 2021. “Social Memes and Depictions of Refugees in the EU: Challenging Irrationality and Misinformation with a Media Literacy Intervention.” In The Epistemology of Deceit in a Postdigital Era: Dupery by Design, edited by Alison MacKenzie, Jennifer Rose, and Ibrar Bhatt, 195–212. Cham: Springer International Publishing.

Haraszti, Miklós. 2019. “Viktor Orbán’s Propaganda State.” In Brave New Hungary: Mapping the System of National Cooperation, edited by János Matyas Kovács and Balazs Trencsenyi, 211–24. Lanham: Lexington Books.

Heckler, Nuri, and John C. Ronquillo. 2019. “Racist Fake News in United States’ History: Lessons for Public Administration.” Public Integrity 21 (5): 477–90. https://doi.org/10.1080/10999922.2019.1626696.

Heft, Annett, Curd Knüpfer, Susanne Reinhardt, and Eva Mayerhöffer. 2021. “Toward a Transnational Information Ecology on the Right? Hyperlink Networking among Right-Wing Digital News Sites in Europe and the United States.” The International Journal of Press/Politics 26 (2): 484–504. https://doi.org/10.1177/1940161220963670.

Hui, Cindy, Yulia Tyshchuk, William A. Wallace, Malik Magdon-Ismail, and Mark Goldberg. 2012. “Information Cascades in Social Media in Response to a Crisis: A Preliminary Model and a Case Study.” WWW ’12 Companion, 653–56. https://doi.org/10.1145/2187980.2188173.

King, Michelle T. 2020. “Say No to Bat Fried Rice: Changing the Narrative of Coronavirus and Chinese Food.” Food and Foodways 28 (3): 237–49. https://doi.org/10.1080/07409710.2020.1794182.

Kolko, Beth, Lisa Nakamura, and Gilbert Rodman. 2013. Race in Cyberspace. London: Routledge.

Kolluri, Akaash, Nikhil Kolluri, Pranav Venkatesh, Kami Vinton, and Dhiraj Murthy. 2023. “Quantifying the Spread of Racism Online: A Comparative Study of Parler and Twitter.” Presented at Comparative Digital Political Communication: Comparisons across Countries, Platforms, and Time, Toronto, Canada, May.

Langer, Armin. 2022. “Dog-Whistle Politics as a Strategy of American Nationalists and Populists: George Soros, the Rothschilds, and Other Conspiracy Theories.” In Nationalism and Populism, edited by Carsten Schapkow and Frank Jacob, 157–87. Berlin: De Gruyter.

Mason, Lilliana. 2018. Uncivil Agreement: How Politics Became Our Identity. Chicago: University of Chicago Press.

Miyake, Toshio. 2021. “‘Cin Ciun Cian’ (Ching Chong): Yellowness and Neo-Orientalism in Italy at the Time of COVID-19.” Philosophy & Social Criticism 47 (4): 486–511. https://doi.org/10.1177/01914537211011719.

Moritz-Rabson, Daniel. 2018. “Migrant Caravan: Fox News Uses ‘Non-White People’ To Scare White People To Vote for Republicans, MSNBC Host Says.” Newsweek, October 26, 2018. https://www.newsweek.com/fox-using-migrants-scare-white-people-scarborough-1188825.

Mudde, Cas. 2019. “The 2019 EU Elections: Moving the Center.” Journal of Democracy 30 (4): 20–34.

Munn, Luke. 2021. “More than a Mob: Parler as Preparatory Media for the U.S. Capitol Storming.” First Monday 26 (3). https://doi.org/10.5210/fm.v26i3.11574.

Nick, I. M. 2021. “In the Name of Hate: An Editorial Note on the Role Geographically Marked Names for COVID-19 Have Played in the Pandemic of Anti-Asian Violence.” Names 69 (2).

Obaidi, Milan, Jonas Kunst, Simon Ozer, and Sasha Y. Kimel. 2022. “The ‘Great Replacement’ Conspiracy: How the Perceived Ousting of Whites Can Evoke Violent Extremism and Islamophobia.” Group Processes & Intergroup Relations 25 (7): 1675–95. https://doi.org/10.1177/13684302211028293.

Oxford Analytica. 2020. “Extremist Groups Will Use Pandemic to Advance Agenda.” Emerald Expert Briefings. https://doi.org/10.1108/OXAN-DB252341.

Perea, Juan F. 2020. “Immigration Policy as a Defense of White Nationhood.” Georgetown Journal of Law & Modern Critical Race Perspectives 12 (1). https://doi.org/10.2139/ssrn.3544340.

Shang, Shang, Pan Hui, Sanjeev R. Kulkarni, and Paul W. Cuff. 2011. “Wisdom of the Crowd: Incorporating Social Influence in Recommendation Models.” In 2011 IEEE 17th International Conference on Parallel and Distributed Systems, 835–40. https://doi.org/10.1109/ICPADS.2011.150.

Sharevski, Filipo, Peter Jachim, Emma Pieroni, and Amy Devine. 2022. “‘Gettr-Ing’ Deep Insights from the Social Network Gettr.” arXiv. https://doi.org/10.48550/arXiv.2204.04066.

Sharma, Sanjay. 2013. “Black Twitter? Racial Hashtags, Networks and Contagion.” New Formations 78 (78): 46–64. https://doi.org/10.3898/NewF.78.02.2013.

Siddiqui, Sophia. 2021. “Racing the Nation: Towards a Theory of Reproductive Racism.” Race & Class 63 (2): 3–20. https://doi.org/10.1177/03063968211037219.

Sinnenberg, Lauren, Alison M. Buttenheim, Kevin Padrez, Christina Mancheno, Lyle Ungar, and Raina M. Merchant. 2017. “Twitter as a Tool for Health Research: A Systematic Review.” American Journal of Public Health 107 (1): e1–8.

Thomas, Judy L. 2020. “Far-Right Extremists Keep Showing up at BLM Protests. Are They behind the Violence?” The Kansas City Star, June 16, 2020. https://www.kansascity.com/news/local/article243553662.html.

Vega Macías, Daniel. 2021. “The COVID-19 Pandemic on Anti-Immigration and Xenophobic Discourse in Europe and the United States.” Estudios Fronterizos 22.

[1] https://twitter.com/AfricaMustWake/status/1168129741649522688

[2] https://twitter.com/ReaperKat/status/1168152194954211328

[3] https://twitter.com/kcsdmt/status/1168284395922497536

[4] https://twitter.com/PegasusBoat/status/1168142312364564481

[5] I have redacted the sentences before and after the quoted sentence of the first post as their extreme racist speech is not needed to illustrate my points.